We got a new server recently and one of my colleagues ran into an error when restoring a database. The error was a quite generic (reformatted below for readability):

Msg 5133, Level 16, State 1, Line 1 Directory lookup for the file "C:\Users\SQLTwins\Documents\AdventureWorks2014\AdventureWorks2014_Data.mdf" failed with the operating system error 5(Access is denied.). Msg 3156, Level 16, State 3, Line 1 File 'AdventureWorks2014_Data' cannot be restored to 'C:\Users\SQLTwins\Documents\AdventureWorks2014\AdventureWorks2014_Data.mdf'. Use WITH MOVE to identify a valid location for the file.

My immediate reaction was to review the restore script.

USE [master]; GO RESTORE DATABASE [AdventureWorks2014] FROM DISK = 'C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Backup\AdventureWorks2014.bak' WITH MOVE 'AdventureWorks2014_Data' TO 'C:\Users\SQLTwins\Documents\AdventureWorks2014\AdventureWorks2014_Data.mdf', MOVE 'AdventureWorks2014_Log' TO 'C:\Users\SQLTwins\Documents\AdventureWorks2014\AdventureWorks2014_Log.ldf'; GO

All looked well, I subsequently moved to the environmental aspect of troubleshooting. It was a new server and we had just created the target folders to which the database was to be restored.

We attempted to restore to the default database locations configured during SQL Server installation and the restore worked. So, one thing that became clear: the SQL Server service did not have appropriate security rights on the destination folder.

The Solution

Once we determined that it was the security rights on the destination folder, the only thing remaining was to grant the rights. Here’s how we do it.

- Cross-check the user set as the service user for Microsoft SQL Server database engine (use the SQL Server Configuration Manager for interacting with the SQL Server service – here’s why).

- Under Folder properties, ensure that this user has full security rights (or at least equivalent to the rights assigned on the default database folders specified at the time of installation)

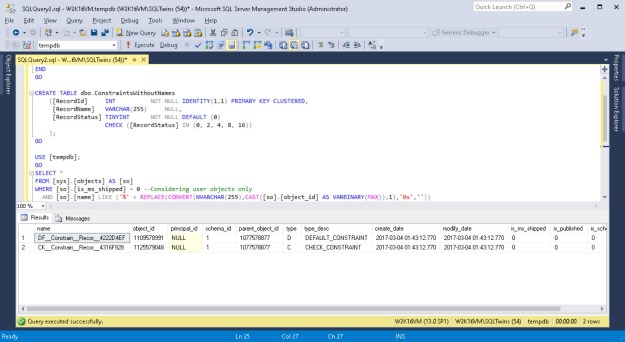

Here’s are detailed screenshots showing the above process.

Identifying the user running the SQL Server Database Engine service

Navigating into file system folder security options to grant access to the SQL Server service

Choosing the appropriate account running the SQL Server service

Applying appropriate rights to folder

By the way: If you encounter similar issues in accessing your backup files, the root cause and solution are the same. Check the permissions on the folders housing your backups and you should see that the database engine does not have the necessary security rights.

Further Reading

Maintaining permissions on data folders and appropriate registry entries is something that is handled by the SQL Server Configuration Manager when you change the service account under which the database engine is running. If you use services.msc (the old way), this is not done and your SQL Server may stop working.

- Changing SQL Server Service Account or Password – Avoid restarting SQL Server [Blog Link]

- Blog Post #0344 – SQL Server – Missing Configuration Manager on Windows 8 [Blog Link]

Until we meet next time,

Be courteous. Drive responsibly.