We a colleague of mine used Database Diagrams to explain our database structure to a new team member. Now typically, we would have started to “draw” the relationships on a white board, but in a world where everyone was working remotely, this was the only option.

The Visual Aspect

I am sure almost everyone in the audience has also used database diagrams at some point as a means of database documentation before switching to methods (like Extended Properties) more suited to modern database development and deployment techniques.

Unfortunately, these techniques do not have the ability to demonstrate the relationships visually. Database diagrams provide this unique ability and hence, warrant a rethink in terms of usage.

Now, the goal of this post is not to show “how” to build a database diagram, but to:

- Demonstrate effective ways of providing as much detail as possible on the diagram

- Show how to export the diagram for offline reference

Increasing the level of detail in a Database Diagram

For effective database diagraming, the recommendation is to group the tables/information shown on the diagram by one of the following two (2) strategies:

- By schema: If you use database schemas, group objects by schema and have at least one database diagram per schema

- By Use-case: Alternatively, tables that are related from a domain perspective (or for a particular use-case, e.g. Authentication) can be selected and be the subject of a diagram

Once you have put all the required tables on the diagram, you may want to right-click on the canvas and choose “Show Relationship Labels”. Additional annotations may also be applied as necessary by using the “New Text Annotation” functionality.

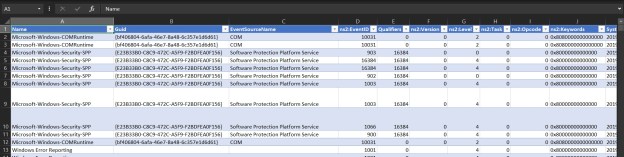

By default, the database diagram will only show the table name and list of columns. For maximum details, you can right-click on the table name -> select “Table View” -> select “Standard”.

As you will notice, using the “Standard” table view will add more details (like datatype and null-ability of columns) on the diagram. The columns can be added/removed by using the “Modify Column” option of the same menu.

This process will need to be done for all tables. Once done, arrange the diagram on the canvas manually.

Exporting the Database Diagram

Once a database diagram is prepared, it can be saved in the database. However, there is no way to export or save a diagram into a file that can be sent via E-mail or stored on a collaboration tool for offline viewing. There is however, a very simple way by which the ultimate goal can be achieved – by storing it as an image!

Now, I am now talking about taking multiple screenshots and stitching them together in an image editing app. It is very simple to copy the diagram as an image.

Simply right-click on the canvas and choose “Copy Diagram to Clipboard”

Once the diagram is on the clipboard, it can be pasted as an image to any image editing application or document!

Further Reading

- Creating Database Diagrams in SQL Server: https://docs.microsoft.com/en-us/sql/ssms/visual-db-tools/create-a-new-database-diagram-visual-database-tools?view=sql-server-ver15

- A guide to using Extended Properties for database documentation from Phil Factor: https://www.red-gate.com/simple-talk/sql/database-delivery/scripting-description-database-tables-using-extended-properties/

I trust this little tip comes to your assistance someday.

Until we meet next time,

Be courteous. Drive responsibly.