Database schema design involves defining the clustered keys (generally the primary key) on a table, and one of the main decisions to be taken is whether to use a clustered key based on a UNIQUEIDENTIFIER/ROWGUID or an INTEGER?

Generally, an INTEGER is a default choice for the clustered key column. Compared to an INTEGER, a GUID takes up a lot of space (36 characters!). Hence the decision to use UNIQUEIDENTIFIER/ROWGUID depends a lot upon the desired application:

- Whether the amount of data to be stored in the table is ever going to exceed the limits of an integer key value?

- The code which is going to consume the data (it helps if the underlying storage uses keys in the same format as the code, as in the case of the .NET Enterprise Framework)

- Nature of integration/DR implemented (e.g. External Id keys used in the integrated system, replication, etc)

If the requirements do require that UNIQUEIDENTIFIER is to be used, the next question is:

What is better to use as the default value for a GUID? NEWID or NEWSEQUENTIALID?

NEWSEQUENTIALID() and NEWID() both generate GUIDs, however NEWSEQUENTIALID() has certain advantages:

- NEWID() generates a lot of random activity, whereas NEWSEQUENTIALID() does not. Hence, NEWSEQUENTIALID() is faster

- Because NEWSEQUENTIALID() is sequential, it helps to fill the data pages faster

In order words,

NEWID() generates more fragmentation when compared to NEWSEQUENTIALID()

NEWID() generates more fragments

To test this, I ran the following test:

- Create two tables – one with NEWID() as the default value for the key and one with NEWSEQUENTIALID()

- Ensure that MAXDOP is set to 0 so that I can insert data into the tables in parallel (to simulate inputs into a table from multiple threads)

- Repeat this process multiple times

- Once the insert is complete, check the average fragmentation on the tables

Allow me to run through the test step-by-step.

The script below creates two tables:

USE tempdb;

GO

SET NOCOUNT ON;

GO

--Safety Check

IF OBJECT_ID('dbo.SequentialIdCheck','U') IS NOT NULL

BEGIN

DROP TABLE dbo.SequentialIdCheck;

END

GO

IF OBJECT_ID('dbo.NonSequentialIdCheck','U') IS NOT NULL

BEGIN

DROP TABLE dbo.NonSequentialIdCheck;

END

GO

--Create the tables (SequentialId Check)

CREATE TABLE dbo.SequentialIdCheck (RowId UNIQUEIDENTIFIER NOT NULL

CONSTRAINT df_SequentialRowId

DEFAULT NEWSEQUENTIALID(),

ObjectId INT NOT NULL,

RowValue NVARCHAR(200) NULL,

CONSTRAINT pk_SequentialIdCheck

PRIMARY KEY CLUSTERED (RowId)

);

GO

--Create the tables (Non SequentialId Check)

CREATE TABLE dbo.NonSequentialIdCheck (RowId UNIQUEIDENTIFIER NOT NULL

CONSTRAINT df_NonSequentialRowId

DEFAULT NEWID(),

ObjectId INT NOT NULL,

RowValue NVARCHAR(200) NULL,

CONSTRAINT pk_NonSequentialIdCheck

PRIMARY KEY CLUSTERED (RowId)

);

GO

Now, I will ensure that max degree of parallelism is turned ON.

sp_configure 'show advanced options',1 RECONFIGURE GO sp_configure 'max degree of parallelism',0 RECONFIGURE GO

Next, I will insert some test data. I will repeat the insert multiple times to simulate an extremely large data-set over multiple inserts.

USE tempdb;

GO

--Insert some test data

--Run the insert 5 times

INSERT INTO dbo.SequentialIdCheck (ObjectId, RowValue)

SELECT sao1.[object_id] AS [ObjectId],

sao1.[name] AS [RowValue]

FROM sys.all_objects AS sao1

CROSS JOIN sys.all_objects AS sao2;

INSERT INTO dbo.NonSequentialIdCheck (ObjectId, RowValue)

SELECT sao1.[object_id] AS [ObjectId],

sao1.[name] AS [RowValue]

FROM sys.all_objects AS sao1

CROSS JOIN sys.all_objects AS sao2;

GO 5

Beginning execution loop Batch execution completed 5 times.

Finally, I check the average fragmentation on the tables by checking the fragmentation of the clustered index.

USE tempdb;

GO

--Check the fragmentation

SELECT OBJECT_NAME(ps.[object_id]) AS ObjectName,

ps.[index_type_desc],

ps.[avg_fragmentation_in_percent],

ps.[fragment_count],

ps.[page_count],

ps.[database_id],

ps.[object_id]

FROM sys.dm_db_index_physical_stats(DB_ID('tempdb'),

OBJECT_ID('dbo.SequentialIdCheck'),

DEFAULT,

DEFAULT,

DEFAULT

) AS ps;

GO

SELECT OBJECT_NAME(ps.[object_id]) AS ObjectName,

ps.[index_type_desc],

ps.[avg_fragmentation_in_percent],

ps.[fragment_count],

ps.[page_count],

ps.[database_id],

ps.[object_id]

FROM sys.dm_db_index_physical_stats(DB_ID('tempdb'),

OBJECT_ID('dbo.NonSequentialIdCheck'),

DEFAULT,

DEFAULT,

DEFAULT

) AS ps;

GO

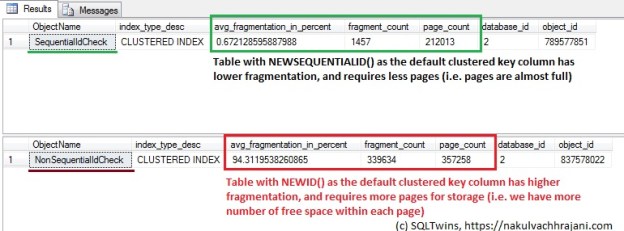

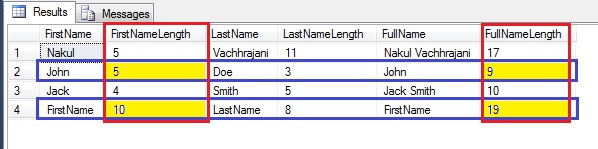

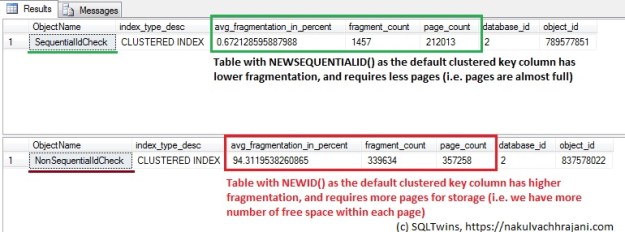

NEWID() results in higher fragmentation and higher pages consumed on disk.

As can be seen clearly from the screenshot above, we see that the table with the NEWID() default key value has a higher fragmentation value when compared to the table with NEWSEQUENTIALID().

We also see that the table with NEWID() default key value has taken a lot of pages – resulting in more space being occupied on disk.

The underlying reason for this is that NEWSEQUENTIALID() generates GUIDs that are in sequence for the given batch, whereas the GUIDs generated by NEWID() are random. When used as a clustered key, having values in sequence helps fill the pages faster and reduce fragmentation.

Conclusion

In most cases, an INTEGER based key on a table is sufficient. However, when a GUID is required by design, it is important to keep in mind that using NEWID() causes more fragmentation in the underlying data resulting in poor system performance. Because non-sequential GUIDs cause fragmentation, they are (generally) not a good choice for using as a clustered index key unless it is required to do so by the business/application design.

If NEWSEQUENTIALID() is to be used, please do keep in mind that the keys need to be generated by the database engine making it tricky when using with Entity Frameworks where the key value is required by the code in order to instantiate an entity.

Further Reading

Until we meet next time,

Be courteous. Drive responsibly.